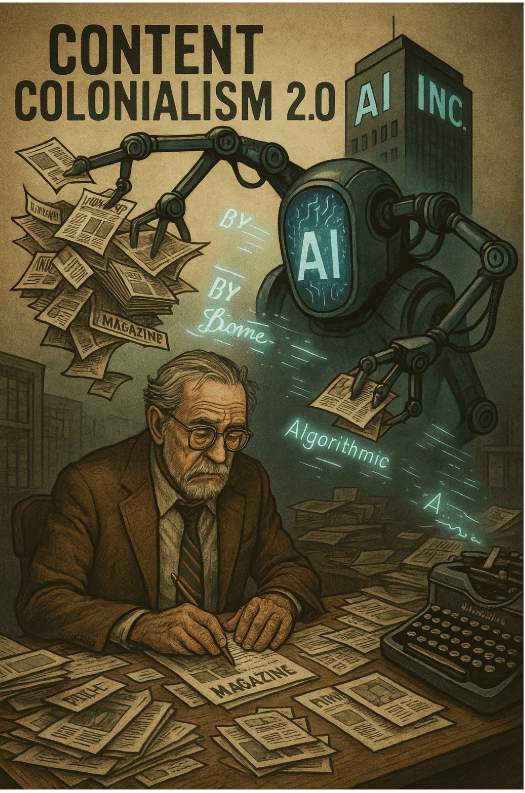

BoSacks Speaks Out: Content Colonialism 2.0, The Great AI Land Grab Is On

By Bob Sacks

Sat, Jul 5, 2025

Tim O’Reilly doesn’t deal in cheap metaphors. So when he declares that:

“AI has adopted colonialism as its business model: Extract resources from others and use it to enrich yourself and your customers at the expense of those whose resources you have taken, without giving much back,” he’s not being poetic. He’s being precise. Source: https://lnkd.in/gpsqf9wr

For those of us in magazine publishing, whether B2B or B2C, monthly or minute-by-minute, ink-stained or pixel-polished, this isn’t a metaphor. It’s the new operational reality. What we’re witnessing isn’t evolution. It’s a quiet occupation. An algorithmic land grab, conducted at scale, beneath the radar of the average reader, and often with the passive consent of distracted regulators.

Here’s the uncomfortable truth: AI didn’t teach itself. It wasn’t raised on open-source Wikipedia entries and math textbooks alone. It fed ravenously on the back catalogues of our industry. The deep archives of reported features. The artfully curated how-tos. The niche expertise. The reviews, the recaps, the recipes. The meticulously fact-checked, edited, headlined, subheaded, SEO-honed output of professional publishing teams. Years, sometimes decades, of editorial investment, consumed in seconds, digested into statistical weights, and now repackaged as “original AI output.”

Let’s stop calling it disruption. Let’s start calling it what it is: digital extraction.

And like the colonialism of the past, the spoils flow one way, up the stack. Trillion-dollar firms get smarter, faster, and richer. Meanwhile, the people who created the original work, the reporters, editors, researchers, photographers, illustrators, designers, are left paying the production bills while bots munch their output and spit it back out as monetized mimicry.

Need proof?

The New York Times is suing OpenAI and Microsoft for allegedly using millions of its articles, without permission, to train GPT. Not blogspam. Not forum posts. Pulitzer-level investigative journalism. The kind of reporting that takes weeks or months to produce and seconds to synthesize. (What happens when you teach a machine to write like the Times, then charge people to bypass the Times altogether? That’s the core of the case.)

In 2023, CNET, once a trusted tech review source, secretly ran dozens of AI-generated stories, some riddled with errors, and all published under ambiguous bylines. Gizmodo and other G/O Media properties followed suit, only to be caught by readers when contradictions, hallucinations, and inaccuracies stacked up.

Meanwhile, entire “magazine” sites are now springing up, fully AI-generated, complete with fake bylines, scraped mastheads, and copycat design. Some use AI-generated faces for their editorial staff. Others mimic the voice and layout of established brands. And advertisers? They don’t care. They just want reach and ROI. (That’s a rant for another day, but it’s part of the same disease.)

This is not traditional plagiarism. It’s plagiarism at industrial scale, turbocharged by opacity, and cloaked in the seductive language of “innovation.”

Now let’s flip the metaphor:

What if someone scraped Spotify’s full catalog, trained a model on Beyoncé, Springsteen, and Kendrick, and dropped an album of AI-generated songs under a new brand? How long before the lawsuits landed?

Or imagine a bot trained on the entire archive of Supreme Court opinions, now producing “new” legal arguments in the style of Gorsuch, Kagan, and Scalia. Would the legal world shrug? Of course not. There’d be a constitutional meltdown.

So why are publishers expected to take it on the chin? Why is our work, our voice, our authority, our identity, up for grabs without negotiation?

Because we’ve been too quiet, too fragmented, too grateful to be included in the “future of content.”

That ends now.

What we need, urgently, is a coordinated counter-offensive:

Enforceable licensing frameworks: If our content trains your AI, you pay. Full stop. Usage without consent is not innovation, it’s IP theft. Groups like The Magazine Coalition are already working on this, and their work is a good place to start your research into compensation possibilities.

Transparency by design: AI-generated content must be labeled. Clearly. Unambiguously. None of this “you can find it in the metadata” nonsense. If it was machine-made, the audience has a right to know.

Coalitions over silos: Let’s stop pretending fragmentation is a strategy.

Publishing, across format, continent, and category, has spent the better part of a century building walls: editorial walls, tech walls, ego walls. Each outlet fortified its turf, guarding audience, data, style, and revenue models like Cold War secrets. It made sense, once. But in a world where algorithms eat distribution and platforms decide visibility, isolation isn’t protection. It’s suicide.

We need to grow up. Fast.

Because the future doesn’t belong to the siloed. It belongs to the aligned, to those with shared standards, interoperable protections, and enough collective mass to shape the terms of engagement. That means journalists. Editors. Designers. Ad ops. Product leads. Tech developers. All under one damn legal tent.

“We can’t license fairly, defend content rights, or negotiate AI guardrails if we show up to the table in 1,000 separate pieces.”

This isn’t a soft call for community. It’s a hard demand for coalition.

Cross-border. Cross-sector. Cross-format. Print and digital. Consumer and trade. Human and machine-assisted. We need a unified publishing front, one loud enough to influence policy and resilient enough to weather platform-induced whiplash.

Yes, dismantling turf wars will be painful. But our only alternative is irrelevance at scale.

The old walls won’t save us. Only collective muscle might.

Policy intervention: Regulators need to wake up. If a tech company can build a billion-dollar tool using our words, our formats, and our ideas, with no consent or compensation, then copyright law is not broken. It’s obsolete.

Because make no mistake: if we don’t assert the value of our work now, loudly, collectively, and with conviction, others will define it for us. And they’ll define it as free. Worse than free: invisible.

This is not an anti-technology screed. You know me better than that. I’m no nostalgic purist pining for hot wax typesetters and rotary presses. I’ve spent five decades running toward the future and occasionally predicting it. But progress without attribution? Scale without ethics? Value creation without compensation? That’s not evolution, that’s exploitation with a slick UX and a cloud API.

Yes, AI is here to stay. But exploitation is not a foregone conclusion. That’s still a fight we can win, if we choose to fight it.

We must organize, license, negotiate, and if necessary, litigate. We must stop treating “free content” as a growth strategy and start treating it as the slow bleed that it is. We must demand transparency, build alliances, and refuse to be the invisible fuel that powers someone else’s valuation.

Because if we don’t? Then we’re not storytellers anymore. We’re not editors or curators or culture-makers.

We’re just data. The raw material. The uncredited, unpaid labor force of the algorithmic empire.

And history tells us what happens to those who remain silent in the face of extraction.

The platforms won’t wait. The algorithms won’t pause.

The only question left is: Will we? Because if we don’t? Then we’re not just losing revenue.

We’re losing authorship. We’re losing identity. We’re losing the cultural authority we spent generations building, handed over, line by line, to machines that don’t create, but copy. To platforms that don’t credit but consume.

We won’t be the publishers anymore. We’ll be the plundered.

Our headlines will become metadata. Our archives will become training fodder.

Our voices, flattened, sampled, and sold back to the public with no trace of the hands that crafted them.

This isn’t a future we can “adapt” to. It’s one we must resist, reshape, and reclaim, now.

Because once our work becomes indistinguishable from the noise, once our authority is absorbed into the synthetic fog, we won’t just be disintermediated. We’ll be erased.

The great AI land grab is on. And if we don’t draw the lines, plant our flags, and defend the value of human-made media, no one else will.

The machines aren’t coming. They’re already here. The question isn’t whether they’ll take everything. The question is what we’re willing to fight for.

This is our industry.

This is our moment.

Let’s not give it away.